Picture this: you're fine-tuning a voice AI model, and it nails commands in crisp Midwestern English every time. But switch to a thick Boston accent or a lilting Nigerian one, and suddenly it's stumbling over simple words like "water" or "schedule." Frustrating, right? That's the reality for many voice AI engineers in 2025, where accents aren't just quirks; they're roadblocks to building truly inclusive systems. Research shows that automatic speech recognition (ASR) models can see word error rates jump as high as 44% for accented English compared to standard variants, leaving non-native speakers out in the cold. And it's not getting better fast enough: even with overall improvements in voice tech, biases persist, with word error rates for Black speakers hovering around 35% versus 19% for white speakers in some studies. The Stanford AI Index underscores this gap, noting that while AI capabilities have surged by over 40% in recent years, accent diversity in training data remains a weak spot, amplifying real-world inequities.

The trouble often starts with how we gather data. Old-school methods rely on polished recordings in quiet studios, which do a poor job of mimicking the chaos of daily life (think bustling markets or echoey offices). That leaves models unprepared for the phonetic twists accents bring, like rolled Rs or elongated vowels. For voice AI engineers, this means rethinking voice data collection from the ground up so that accent adaptation is a priority rather than an afterthought.

Getting Real: Strategies for Authentic Adaptation

One solid way to tackle this is by pulling in recordings from everyday settings, where people speak naturally amid noise and interruptions. Crowdsourcing helps here, letting contributors record on their phones while going about their day. Then there's transfer learning: you take a general-purpose model and fine-tune it on targeted accent data. I've also seen engineers simulate variations by tweaking audio filters, for instance drawing out syllables to approximate a Southern drawl or sharpening consonants to approximate a Scottish burr. This isn't guesswork; it's about building resilience to the variation a model will meet in the field.
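The filter-tweaking idea can be sketched in a few lines. What follows is a minimal illustration, not a recipe from any particular toolkit: it uses speed perturbation (resampling by linear interpolation) as a crude stand-in for drawn-out syllables, and a pre-emphasis filter as a stand-in for sharper consonants. The function names, the 0.85 to 1.15 speed range, and the 0.97 coefficient are all assumptions chosen for the example, and none of this models a real accent; it only widens the acoustic variety a model sees.

```python
import math
import random


def speed_perturb(samples, factor):
    """Resample by linear interpolation so the clip plays `factor` times faster.

    factor < 1.0 lengthens the clip. Unlike a true time-stretch, this also
    shifts pitch, which is acceptable for a rough augmentation sketch.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    if len(samples) < 2:
        return list(samples)
    out_len = max(2, int(round(len(samples) / factor)))
    step = (len(samples) - 1) / (out_len - 1)
    out = []
    for i in range(out_len):
        pos = i * step
        lo = min(int(pos), len(samples) - 2)  # keep lo + 1 in range
        frac = pos - lo
        out.append(samples[lo] * (1.0 - frac) + samples[lo + 1] * frac)
    return out


def pre_emphasis(samples, coeff=0.97):
    """First-order high-pass filter: y[n] = x[n] - coeff * x[n - 1].

    Boosts high frequencies relative to low ones, where much consonant
    energy sits.
    """
    if not samples:
        return []
    out = [samples[0]]
    for n in range(1, len(samples)):
        out.append(samples[n] - coeff * samples[n - 1])
    return out


if __name__ == "__main__":
    rate = 16000  # assumed sample rate in Hz
    # Synthetic 220 Hz tone, 0.1 s long, standing in for a real recording.
    tone = [math.sin(2 * math.pi * 220 * n / rate) for n in range(rate // 10)]
    rng = random.Random(0)
    factor = rng.uniform(0.85, 1.15)  # hypothetical perturbation range
    variant = pre_emphasis(speed_perturb(tone, factor))
    print(len(tone), len(variant))
```

The pure-Python loops keep the sketch dependency-free; on real audio you would likely reach for an array library instead, and you would want to listen to the results before trusting them as training data.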

To really scale up, though, decentralized networks are changing the game. These DePIN (decentralized physical infrastructure network) setups turn ordinary devices into data hubs, crowdsourcing without the hassle of centralized servers. Silencio stands out as a prime example. This network taps into smartphones globally to capture ambient sounds and voice clips, all while keeping things private and rewarding participants. They've clocked over 4,500 hours of recordings across 26 languages and accents, pulling in hundreds of thousands of uploads from real environments. What makes it click for accent adaptation? The data's raw and varied, helping models cut word error rates by 15-28% in tough scenarios, based on adaptation benchmarks. It's like giving your AI ears that actually listen to the world as it is.
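If you want to check a claim like that against your own models, the yardstick is word error rate: substitutions, deletions, and insertions divided by the number of reference words, tallied separately for each accent group. Here is a small sketch of that bookkeeping. The function names and the `(group, reference, hypothesis)` row layout are assumptions made for the example, and `relative_reduction` treats a figure like "15-28%" as a relative drop, which the benchmarks quoted above don't actually specify.

```python
def word_errors(reference, hypothesis):
    """Return (word-level edit distance, number of reference words)."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    prev = list(range(len(hyp) + 1))  # distances against an empty reference
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1], len(ref)


def wer_by_group(rows):
    """rows: iterable of (group, reference, hypothesis) -> {group: WER}."""
    totals = {}
    for group, ref, hyp in rows:
        errs, n = word_errors(ref, hyp)
        e, t = totals.get(group, (0, 0))
        totals[group] = (e + errs, t + n)
    # Pool errors over all words in a group rather than averaging per clip.
    return {g: e / t for g, (e, t) in totals.items() if t > 0}


def relative_reduction(before, after):
    """Fractional WER drop, e.g. 0.40 -> 0.30 is a 25% relative reduction."""
    return (before - after) / before


if __name__ == "__main__":
    rows = [  # made-up transcripts, for illustration only
        ("accent_a", "turn on the water", "turn on the waiter"),
        ("accent_a", "check my schedule", "check my schedule"),
        ("accent_b", "turn on the water", "turn on the water"),
    ]
    print(wer_by_group(rows))
```

Running the same evaluation set through a model before and after adaptation, then comparing the per-group numbers, tells you whether the gain landed on the accents you were targeting or only on the ones that were already doing fine.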

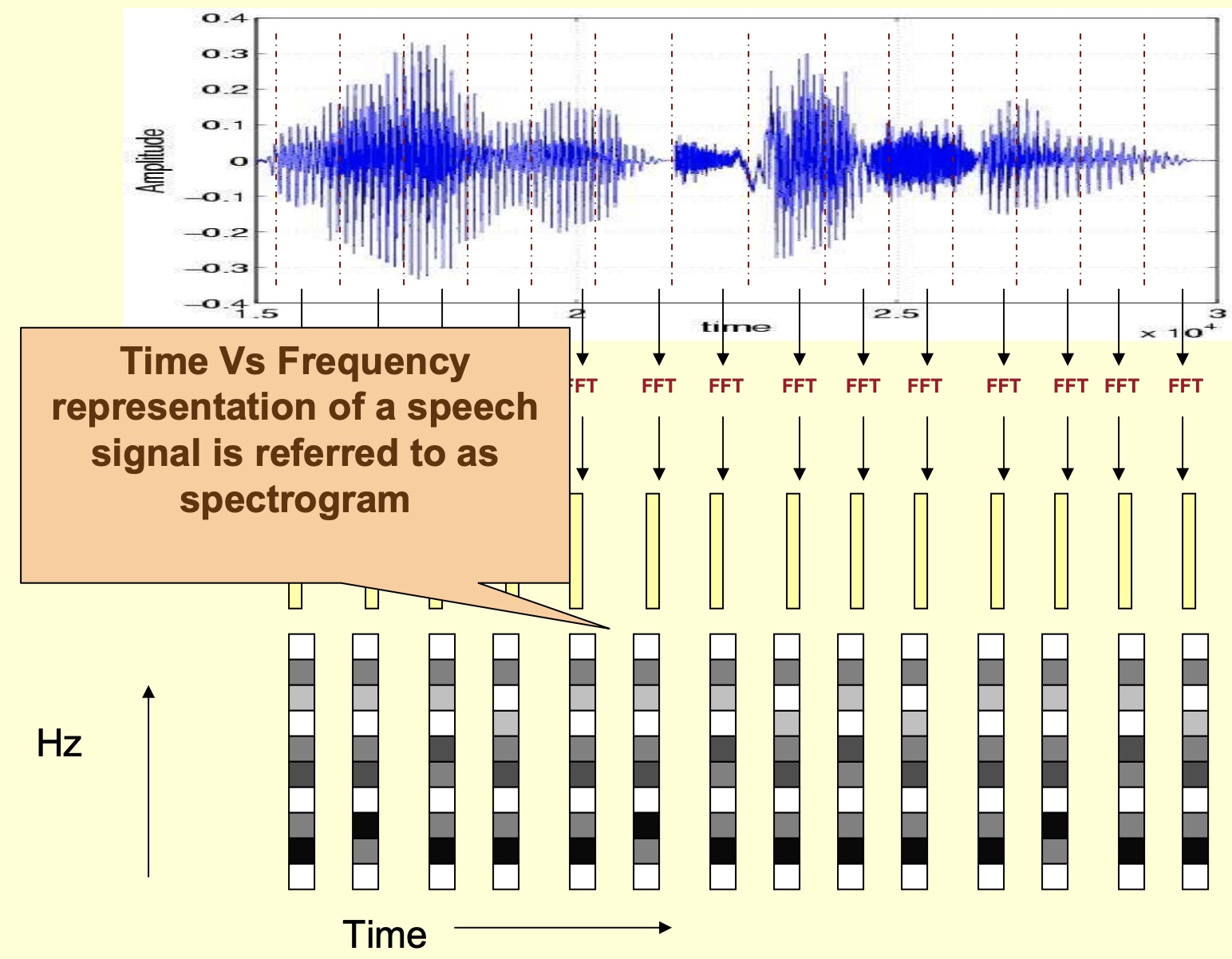

To make this concrete, let's look at some visuals from typical datasets. These waveforms highlight how accents shift patterns in amplitude and frequency, which is crucial when you're debugging why a model trips up on certain phonemes.


If you're itching to experiment, dive into openly available datasets. Mozilla's Common Voice dataset is a go-to, packed with crowd-sourced clips from all sorts of dialects. For something more structured, the TIMIT corpus (distributed through the Linguistic Data Consortium rather than as a free download) covers eight major dialect regions of American English, which makes it handy for initial tests. And OpenSLR has a trove of dialect-focused recordings ready for your pipelines.
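Before training on any of these, it's worth checking how the accent labels are actually distributed. The sketch below tallies accent labels from a Common Voice style TSV. It assumes the metadata has a column named `accents` (or `accent` in older releases); check the header of the file you downloaded, because the column names here are an assumption, and many clips carry no accent label at all.

```python
import csv
import io
from collections import Counter


def accent_counts(tsv_file, columns=("accents", "accent")):
    """Count clips per accent label in a tab-separated metadata file.

    `columns` lists candidate column names (assumed, not guaranteed);
    the first one present in the header is used.
    """
    reader = csv.DictReader(tsv_file, delimiter="\t", quoting=csv.QUOTE_NONE)
    header = reader.fieldnames or []
    field = next((c for c in columns if c in header), None)
    if field is None:
        raise KeyError(f"none of {columns} found in header {header}")
    counts = Counter()
    for row in reader:
        label = (row.get(field) or "").strip()
        counts[label or "(unlabeled)"] += 1
    return counts


if __name__ == "__main__":
    # Tiny in-memory stand-in for a real metadata file.
    sample = io.StringIO(
        "client_id\tpath\tsentence\taccents\n"
        "c1\ta.mp3\thello there\tScottish English\n"
        "c2\tb.mp3\tgood morning\t\n"
        "c3\tc.mp3\tsee you soon\tScottish English\n"
    )
    for label, n in accent_counts(sample).most_common():
        print(label, n)
```

For a real file, open it with `open(path, newline="", encoding="utf-8")` and pass the handle in. A heavily skewed tally is a hint to rebalance or collect more data before blaming the model.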

Bringing It Home: Robotics and Everyday Wins

These techniques pay off big in the field, especially for robotics, where voice commands need to work reliably across crews. Imagine a factory robot responding to instructions from a team with mixed accents (Indian, Australian, you name it) without hiccups. Field trials show accent-tuned models halving mishears in noisy spots, making interactions smoother and safer. Looking ahead, some projections have voice tech powering up to 95% of customer interactions by year's end, from chatbots to smart assistants. But to go global, you often need pros who handle the nuances of localization. Companies like Artlangs Translation fit the bill, with expertise in over 230 languages built from years of work in translations, video and game localization, short drama subtitling, and multilingual dubbing for audiobooks. Their track record of standout projects helps ensure voice AI doesn't just work but also connects culturally.