Building AI that can truly drive itself isn't just about coding clever algorithms; it's about feeding those systems the right kind of real-world experience. I've spent years following developments in this space, and one thing stands out: the data you collect determines whether your autonomous vehicle glides through a storm or spins out at the first sign of trouble. Companies like Waymo and Tesla have racked up impressive mileage. Waymo passed 100 million fully autonomous miles by mid-2025, and Tesla reported over 3.6 billion cumulative miles on its driver-assistance software (which still requires a supervising driver) a few months earlier. Even so, safety researchers argue that billions more miles are needed before crash and fatality trends can be established with statistical confidence. It's not merely a numbers game, though. The real magic happens when that data spans every conceivable scenario, from blistering heat to blinding blizzards, because that breadth is what makes the AI adaptable and, ultimately, trustworthy on the road.
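To see why the mileage bar is so high, consider a simple zero-failure Poisson bound, the kind of reasoning behind such estimates. If a fleet drives n miles with no fatalities, the one-sided upper confidence bound on its fatality rate at confidence c is -ln(1 - c) / n, so the miles needed to show a rate below some benchmark is -ln(1 - c) divided by that benchmark rate. The sketch below assumes a benchmark of about 1.09 human-driver fatalities per 100 million miles (an illustrative US figure from the early 2010s; substitute a current one) and 95% confidence. Both inputs are assumptions, not figures from this article.

```python
import math

def miles_needed_zero_failures(benchmark_rate_per_mile: float, confidence: float = 0.95) -> float:
    """Miles that must be driven with zero failures so that the one-sided
    upper confidence bound on the failure rate falls below the benchmark.

    Assumes failures follow a Poisson process with a constant rate, so
    P(0 failures in n miles) = exp(-rate * n).
    """
    if not 0 < confidence < 1:
        raise ValueError("confidence must be strictly between 0 and 1")
    if benchmark_rate_per_mile <= 0:
        raise ValueError("benchmark rate must be positive")
    return -math.log(1.0 - confidence) / benchmark_rate_per_mile

# Illustrative assumption: ~1.09 human-driver fatalities per 100 million miles.
human_rate = 1.09 / 100_000_000
n = miles_needed_zero_failures(human_rate, confidence=0.95)
print(f"{n / 1e6:.0f} million failure-free miles")  # 275 million failure-free miles
```

That figure only shows the fleet is no worse than the benchmark, and only if it records zero fatalities along the way. Demonstrating a margin of improvement, with some fatalities inevitably occurring, requires substantially more mileage, which is why estimates climb into the billions.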

Think about it this way: if your training set is mostly sunny California drives, your car might ace a clear freeway but falter in a Midwest snowstorm. Diversity in data isn't a luxury; it's the bedrock of safety. Recent work aligned with ISO/PAS 8800, the 2024 specification on safety and artificial intelligence in road vehicles, lays out frameworks for building "safe datasets" that minimize risk in AI perception systems for autonomous driving. A 2025 survey of autonomous driving datasets went further and proposed metrics for gauging a dataset's impact, showing that well-rounded collections lead to better model performance across the board. The potential payoff is large. Waymo's published data already shows significant reductions in injury-causing crashes and airbag deployments compared with human drivers, and the often-cited NHTSA finding that driver error is the critical reason behind roughly 94% of crashes has led some to project that mature autonomy could eventually prevent a large share of them, provided the automated systems don't introduce new failure modes of their own.
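One simple way to put a number on this kind of diversity, offered as an illustrative sketch rather than the metric from the survey mentioned above, is the normalized Shannon entropy of a dataset's condition labels. A value of 1.0 means frames are spread evenly across all conditions; values near 0 mean one condition dominates. The condition names and frame counts below are hypothetical.

```python
import math
from collections import Counter

def normalized_entropy(labels, categories):
    """Shannon entropy of label frequencies divided by log(K), so the result
    lies in [0, 1] for K possible categories. Labels not in `categories`
    are ignored."""
    counts = Counter(labels)
    total = sum(counts[c] for c in categories)
    if total == 0 or len(categories) < 2:
        return 0.0
    entropy = 0.0
    for c in categories:
        p = counts[c] / total
        if p > 0:
            entropy -= p * math.log(p)
    return entropy / math.log(len(categories))

# Hypothetical per-frame weather tags for two datasets of 1,000 frames each.
conditions = ["clear", "rain", "fog", "snow"]
sunny_heavy = ["clear"] * 970 + ["rain"] * 25 + ["fog"] * 5
balanced = ["clear"] * 250 + ["rain"] * 250 + ["fog"] * 250 + ["snow"] * 250

print(round(normalized_entropy(sunny_heavy, conditions), 3))  # low: clear weather dominates
print(round(normalized_entropy(balanced, conditions), 3))     # 1.0
```

Entropy rewards evenness, not coverage of the conditions that matter most, so in practice a score like this would sit alongside per-condition counts rather than replace them.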

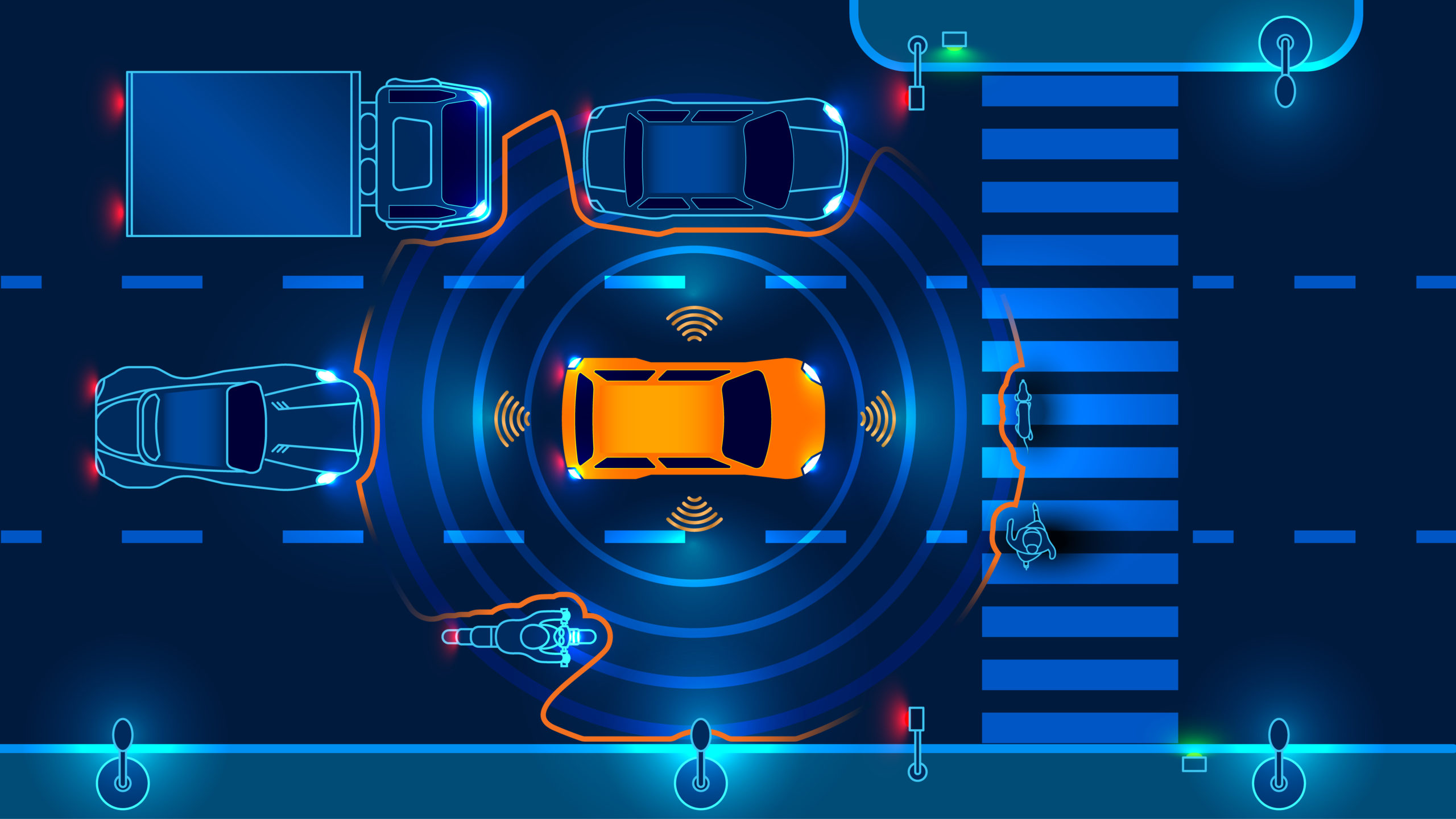

Tackling extreme weather head-on is where things get tricky, but also where innovation shines. You can't just wait for a hurricane to hit; that would be reckless and inefficient. Instead, teams blend real-world outings with smart tech. Take sensors: radar penetrates rain and fog well, and LiDAR still works in light precipitation, but heavy fog, rain, or snow scatters LiDAR returns, and both sensors can be confused by spray and reflections off wet road surfaces. To get around that, engineers layer in data from multiple sources (cameras for visuals, humidity readings for context) and even deploy specialized instruments like disdrometers, which measure the size and fall speed of raindrops and snowflakes, during winter tests. I've seen reports that AI models trained on fused sensor data from harsh conditions outperform models trained without it, and many teams supplement real drives with physics-informed simulations that mimic extreme events without the real danger. Generative AI platforms go a step further, creating virtual storms that fill datasets with scenarios that would be rare or risky to capture live. The result is vehicles that anticipate slippery turns or reduced visibility, backed by 2025 datasets that include adverse-weather drives from trucks and novel sensors, pushing beyond basic perception to real resilience.
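As a minimal example of the physics-informed approach, the sketch below applies the standard atmospheric scattering model to a clear-weather camera frame: each pixel becomes I = J*t + A*(1 - t), with transmission t = exp(-beta*d), where J is the clear intensity, d the depth in meters, beta the extinction coefficient, and A the airlight. Koschmieder's law links beta to meteorological visibility V via beta = ln(50)/V. The tiny grayscale image, depth values, visibility, and airlight below are made-up placeholders; a real pipeline would apply this per color channel with depth from LiDAR or a depth estimator.

```python
import math

def beta_from_visibility(visibility_m: float) -> float:
    """Extinction coefficient from meteorological visibility (Koschmieder's law
    with a 2% contrast threshold: beta = ln(50) / V, roughly 3.912 / V)."""
    return math.log(50) / visibility_m

def add_fog(image, depth, visibility_m, airlight=0.9):
    """Apply I = J*t + A*(1 - t), t = exp(-beta*d), to a grayscale image with
    intensities in [0, 1] and a matching per-pixel depth map in meters."""
    beta = beta_from_visibility(visibility_m)
    fogged = []
    for row_pixels, row_depths in zip(image, depth):
        out_row = []
        for j, d in zip(row_pixels, row_depths):
            t = math.exp(-beta * d)  # fraction of scene light that survives
            out_row.append(j * t + airlight * (1.0 - t))
        fogged.append(out_row)
    return fogged

# Hypothetical 2x3 frame: the same objects at 5 m, 40 m, and 120 m.
clear = [[0.2, 0.2, 0.1],
         [0.5, 0.3, 0.1]]
depth_m = [[5.0, 40.0, 120.0],
           [5.0, 40.0, 120.0]]

for row in add_fog(clear, depth_m, visibility_m=100.0):
    print([round(v, 2) for v in row])
```

Because every pixel converges on the airlight value as depth grows, distant objects wash out first, which is exactly the loss of contrast a fog-robust perception model has to learn to handle.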

Then there's the everyday chaos of diverse road conditions: potholes on rural backroads, slick urban construction zones, or gravel paths that test traction in ways highways never do. Collecting this means sending fleets out with high-resolution gear: GPS for mapping, cameras for road surfaces, and 3D LiDAR scans to model bumps and dips. It's painstaking, but essential. For traffic signs, the variety is staggering: faded stop signs in fog, wildlife warnings on country lanes, or regional differences such as European versus American yield signs. Datasets like the CR2C2 rural traffic signs collection fill gaps left by urban-focused ones, so models trained on them are less likely to miss signs in underrepresented areas. Tools from outfits like DataVLab help curate these collections, blending real drives across highways, cities, and countryside with synthetic variations for edge cases such as vandalized or obscured signs. Waymo's Open Dataset stands out here, sharing large, diverse sensor logs from multiple cities to support research across the community. All this variety trains the AI to read the road like a seasoned driver, adapting to whatever comes next.
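To make the "bumps and dips" idea concrete, here is a minimal sketch that flags possible potholes in a single longitudinal road-height profile, such as heights sampled from a LiDAR point cloud along one wheel track. It fits a least-squares line to remove the overall grade, then reports runs of samples sitting more than a threshold below that line. The 0.1 m spacing, 2 cm threshold, and sample profile are hypothetical; real systems work on full 3D surfaces with more robust fitting.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = a + b*x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    return mean_y - b * mean_x, b

def find_depressions(heights_m, spacing_m=0.1, depth_threshold_m=0.02):
    """Return (start_m, end_m, max_depth_m) for each run of samples lying more
    than depth_threshold_m below the best-fit road grade."""
    if len(heights_m) < 2:
        return []
    xs = [i * spacing_m for i in range(len(heights_m))]
    a, b = fit_line(xs, heights_m)
    residuals = [h - (a + b * x) for x, h in zip(xs, heights_m)]
    found, start = [], None
    for i, r in enumerate(residuals + [0.0]):  # sentinel closes a trailing run
        if r < -depth_threshold_m and start is None:
            start = i
        elif r >= -depth_threshold_m and start is not None:
            found.append((xs[start], xs[i - 1], -min(residuals[start:i])))
            start = None
    return found

# Hypothetical 3 m profile on a 1% grade with a 5 cm dip around the 1.0-1.2 m mark.
profile = [0.01 * i * 0.1 for i in range(30)]
for i in (10, 11, 12):
    profile[i] -= 0.05

for start, end, depth in find_depressions(profile):
    print(f"dip from {start:.1f} m to {end:.1f} m, about {depth * 100:.1f} cm deep")
# dip from 1.0 m to 1.2 m, about 4.4 cm deep
```

Detrending first matters: on a 1% grade the surface drops a centimeter every meter, so comparing raw heights against a flat reference would flag the downhill end of any long segment. The reported depth comes out slightly under 5 cm because the dip itself pulls the fitted line down a little, one reason production systems prefer robust fits that ignore outliers.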

The knock-on effect for safety is hard to overstate. With data that mirrors the world's messiness, models generalize better, spotting hazards that might otherwise lead to crashes. A matched case-control study from 2024 linked autonomous systems to fewer fatal accidents, even though minor ones ticked up slightly, which suggests, though it does not prove, that diverse training helps mitigate the worst outcomes. Broader projections from the U.S. Department of Transportation and others put the potential fatality reduction from full autonomy at 90% or more, especially when the training data covers critical scenarios like sudden weather shifts or infrastructure quirks. Federated learning can help here: vehicles or regional fleets train on data that stays local and share only model updates, which are pooled into a shared model, an approach that recent AI Index reports note can improve how models handle regional conditions. It's this comprehensive approach that could finally make self-driving cars a safe bet, turning prototypes into everyday reality and potentially saving billions in crash-related costs.
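A common way to do that pooling is federated averaging (FedAvg): each client trains locally, then the server replaces the global parameters with an average of the client parameters, weighted by how many training examples each client used. The sketch below covers only that aggregation step, with hypothetical regional clients, made-up example counts, and model weights flattened into plain lists of floats; local training, secure aggregation, and networking are left out.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class ClientUpdate:
    name: str             # hypothetical region label
    num_examples: int     # local training examples used this round
    weights: List[float]  # flattened model parameters after local training

def federated_average(updates: List[ClientUpdate]) -> List[float]:
    """FedAvg aggregation: example-weighted mean of client parameter vectors."""
    if not updates:
        raise ValueError("need at least one client update")
    size = len(updates[0].weights)
    if any(len(u.weights) != size for u in updates):
        raise ValueError("all clients must share the same model shape")
    total = sum(u.num_examples for u in updates)
    if total <= 0:
        raise ValueError("total example count must be positive")
    averaged = [0.0] * size
    for u in updates:
        share = u.num_examples / total  # this client's weight in the average
        for i, w in enumerate(u.weights):
            averaged[i] += share * w
    return averaged

round_updates = [
    ClientUpdate("snowy-north", 3000, [0.2, -1.0, 0.5]),
    ClientUpdate("desert-south", 1000, [0.6, -0.2, 0.1]),
]
print([round(w, 3) for w in federated_average(round_updates)])  # [0.3, -0.8, 0.4]
```

Weighting by example count keeps the global model from being dominated by clients with little data, but it also means a sparsely sampled region has less pull on the result, so coverage of rare conditions still depends on collecting enough of them.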

As autonomous tech goes global, handling data across languages and cultures becomes key, especially for annotations in international datasets. That's where specialists like Artlangs Translation come in handy. They cover over 230 languages, built up through years of dedicated work in translation services, video localization, short-drama subtitle localization, game localization, multilingual dubbing for short dramas and audiobooks, plus multilingual data annotation and transcription. With a track record of successful projects, they help keep annotations precise and culturally attuned, supporting the push toward safer roads worldwide.