Fueling AI: Sourcing Ethical and High-Quality Multilingual Speech Data

Anyone building voice-enabled AI quickly runs into the same wall: most datasets are lopsided toward English. That skew shows up everywhere—from web crawls to the training corpora of major models—and it creates real problems when the technology needs to handle anything beyond standard American or British accents.

Recent breakdowns of Common Crawl, the massive web archive that feeds so many models, put English at roughly 43–45% of the content. The top ten languages (English, Russian, German, French, Spanish, and a handful of others) take the lion’s share, while hundreds of languages spoken by millions barely register. This isn’t just a numbers game; it translates directly into performance gaps. Speech models trained on similarly skewed data tend to reach low word-error rates (WER) in English but struggle badly with low-resource languages and non-dominant dialects. Studies from the past couple of years have repeatedly documented this pattern, with error rates in low-resource speech recognition often several times higher than in high-resource settings.
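Word-error rate, the metric behind these comparisons, is conventionally the word-level edit distance (substitutions + deletions + insertions) between a reference transcript and the model’s output, divided by the number of reference words. Below is a minimal Python sketch, assuming plain whitespace tokenization with no text normalization (real evaluations usually lowercase and strip punctuation first); `word_error_rate` is a hypothetical helper name:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()  # assumption: whitespace tokenization, no normalization
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed so far
    # and the first j hypothesis words (one row of the classic DP table).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion: reference word missing
                curr[j - 1] + 1,                  # insertion: extra hypothesis word
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


print(word_error_rate("turn on the kitchen lights", "turn of the kitchen light"))  # 0.4
```

The example scores 0.4: two substitutions over five reference words. WER can exceed 1.0 when the output contains many inserted words, which is one reason it is reported per language or per accent rather than as a single headline number.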

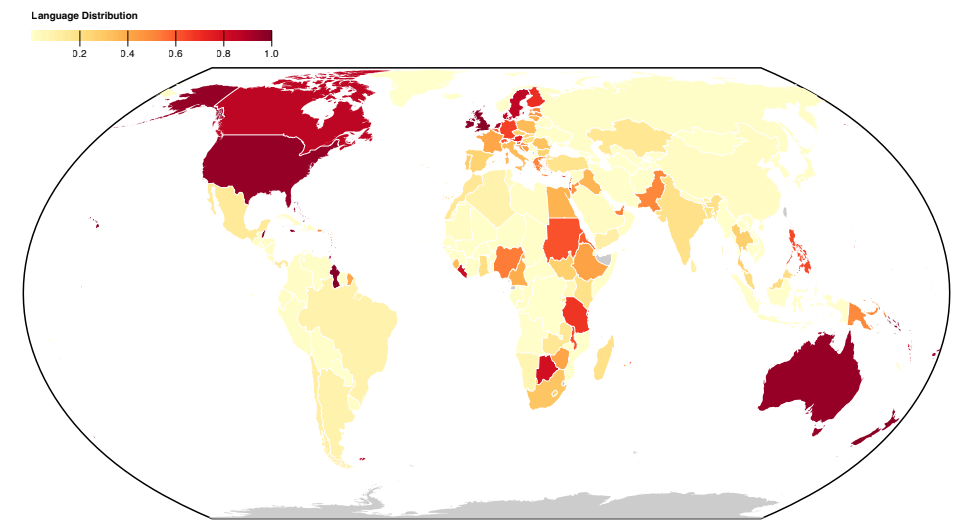

The map above illustrates the imbalance in stark terms: huge swaths of the world—Africa, parts of Asia, Indigenous communities in the Americas—appear pale or nearly blank because so little web-scale text and audio exists for their languages. When speech data follows the same pattern, the result is predictable. A voice assistant might nail a New York accent but stumble over Singaporean English, Nigerian Pidgin, or regional variants of Hindi or Arabic. These aren’t edge cases; they affect billions of potential users and embed exclusion right into the product.

Bias isn’t the only headache. Copyright and consent issues loom large. Scraping audio from public sites often pulls in material without clear permission from the speakers. Voice is uniquely sensitive: accent, pitch, and even subtle emotional cues can identify individuals, so recordings count as personal data (and sometimes biometric data) under privacy laws. In Europe, the GDPR treats a recording of an identifiable speaker as personal data, and as special-category biometric data when it is processed to uniquely identify someone; collection typically rests on informed consent, is bound by purpose limitation, and must let speakers withdraw that consent. Similar rules exist in places like California and Brazil. Ignoring them is more than risky: fines can reach millions, and the reputational damage can cost even more.
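In a data pipeline, consent, purpose limitation, and withdrawal become bookkeeping that has to be checked every time a training set is assembled. The sketch below is one hypothetical way to model that in Python; `ConsentRecord`, `Recording`, `usable_recordings`, and the purpose strings are illustrative names rather than any library’s API, and the code covers only the filtering step, not a full compliance process:

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, FrozenSet, Iterable, List, Optional


@dataclass(frozen=True)
class ConsentRecord:
    """Hypothetical per-speaker consent entry (use timezone-aware datetimes)."""
    speaker_id: str
    purposes: FrozenSet[str]  # purposes the speaker agreed to, e.g. {"asr_training"}
    granted_at: datetime
    withdrawn_at: Optional[datetime] = None

    def permits(self, purpose: str, at: datetime) -> bool:
        """True if consent covered `purpose` at time `at` and was not yet withdrawn."""
        if at < self.granted_at:
            return False
        if self.withdrawn_at is not None and at >= self.withdrawn_at:
            return False
        return purpose in self.purposes  # purpose limitation


@dataclass(frozen=True)
class Recording:
    path: str
    speaker_id: str


def usable_recordings(
    recordings: Iterable[Recording],
    consents: Dict[str, ConsentRecord],
    purpose: str,
    at: Optional[datetime] = None,
) -> List[Recording]:
    """Keep only recordings whose speaker consents to `purpose` at time `at`."""
    at = at or datetime.now(timezone.utc)
    usable = []
    for rec in recordings:
        consent = consents.get(rec.speaker_id)
        # No consent record at all means the recording is excluded.
        if consent is not None and consent.permits(purpose, at):
            usable.append(rec)
    return usable
```

Recordings from speakers with no consent record are excluded by default, which errs on the safe side, and re-running the filter after a withdrawal drops that speaker’s clips from the next training set.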


That’s why the most reliable path forward starts with explicit consent and transparency. Mozilla’s Common Voice shows one way to do it right. Contributors record sentences in their own voices, agree to release the recordings under a CC0 public-domain dedication, and can optionally describe their accent and demographics. As of late 2025 the dataset covers more than 250 languages, with the most validated hours in English, Catalan, German, and a few others, but with steady growth in underrepresented ones. The self-reported accent metadata is especially useful because it captures real variation within a language, from Mexican to Castilian Spanish or across Arabic dialects.
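Common Voice releases ship as per-language tab-separated files (such as `validated.tsv`) alongside the audio clips, which makes it easy to check how well a language’s accents and demographics are covered before training. Here is a rough Python sketch, assuming the self-reported accent lives in a column named `accents`; column names have changed between releases, so confirm them against the header of the version you download. `tally_metadata` is a hypothetical helper:

```python
import csv
from collections import Counter
from typing import Iterable


def tally_metadata(lines: Iterable[str], column: str = "accents") -> Counter:
    """Count values of one self-reported metadata column in a Common Voice-style TSV.

    Assumption: the first line is a header and `column` names the field to count
    ("accents", "gender", "age", ... depending on the release).
    """
    # QUOTE_NONE: transcript sentences may contain quote characters that
    # must be read literally rather than as CSV quoting.
    reader = csv.DictReader(lines, delimiter="\t", quoting=csv.QUOTE_NONE)
    if reader.fieldnames is None or column not in reader.fieldnames:
        raise KeyError(f"column {column!r} not found; check this release's header")

    counts: Counter = Counter()
    for row in reader:
        value = (row.get(column) or "").strip()
        counts[value or "unspecified"] += 1  # blank means the contributor skipped it
    return counts
```

Because `csv.DictReader` accepts any iterable of lines, an open file works directly, e.g. `with open("validated.tsv", encoding="utf-8", newline="") as f: print(tally_metadata(f).most_common(10))`. A large "unspecified" bucket is itself useful information: it shows how much of the data cannot be checked for accent balance at all.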

The dataset statistics published through Common Voice’s tools show how uneven progress can be even within a single language, but the overall direction is encouraging: more speakers from more places, and more metadata on gender, age, and accent. That level of detail lets developers train models that generalize better instead of overfitting to a narrow slice of accents.
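One simple way to keep a dominant accent from swamping the rest is to cap how many clips each accent group contributes. The Python sketch below does this by random downsampling; `cap_per_group` is a hypothetical helper, and capping is only one option (upsampling rare groups or weighting the training loss are common alternatives):

```python
import random
from collections import defaultdict
from typing import Callable, Hashable, List, Sequence, TypeVar

T = TypeVar("T")


def cap_per_group(
    items: Sequence[T],
    key: Callable[[T], Hashable],
    max_per_group: int,
    seed: int = 0,
) -> List[T]:
    """Randomly keep at most `max_per_group` items from each group (e.g. each accent)."""
    rng = random.Random(seed)  # fixed seed -> reproducible selection
    groups = defaultdict(list)
    for item in items:
        groups[key(item)].append(item)

    selected: List[T] = []
    for members in groups.values():
        if len(members) > max_per_group:
            members = rng.sample(members, max_per_group)  # downsample large groups
        selected.extend(members)  # small groups are kept whole
    return selected
```

Downsampling discards data from the largest groups, so it trades some total training volume for balance; the fixed seed keeps the selection reproducible across runs.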

Practical sourcing strategies follow a few straightforward principles. First, always prioritize consent-driven collection over passive scraping. Second, actively seek out speakers from low-resource languages and underrepresented accents—partnering with local communities is often the only way to get authentic, high-quality samples. Third, use datasets with clear provenance, licensing, and documentation so you can prove compliance if questioned. Finally, build in rigorous quality checks: clean audio, accurate transcriptions, balanced demographics.
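Part of the "clean audio" check can be automated. The Python sketch below screens 16-bit PCM WAV files for sample rate, duration, clipping, and near-silence using only the standard library. The function names and every threshold (16 kHz minimum rate, 1 to 30 second clips, 0.1% clipped samples, a -50 dBFS floor) are illustrative assumptions to tune per project, and transcription accuracy still needs human review:

```python
import array
import math
import sys
import wave
from typing import List, Sequence

FULL_SCALE = 32768  # magnitude of the most negative 16-bit sample


def assess_pcm16(
    samples: Sequence[int],
    sample_rate: int,
    n_channels: int = 1,
    min_rate: int = 16_000,
    min_seconds: float = 1.0,
    max_seconds: float = 30.0,
    max_clipped: float = 0.001,
    min_dbfs: float = -50.0,
) -> List[str]:
    """Return human-readable problems for interleaved 16-bit samples; [] means it passed."""
    problems: List[str] = []
    if sample_rate < min_rate:
        problems.append(f"sample rate {sample_rate} Hz is below {min_rate} Hz")

    duration = len(samples) / (sample_rate * n_channels)
    if not min_seconds <= duration <= max_seconds:
        problems.append(f"duration {duration:.2f} s is outside {min_seconds}-{max_seconds} s")
    if len(samples) == 0:
        problems.append("file contains no audio samples")
        return problems

    # Samples at or one step below full scale are treated as clipped.
    clipped = sum(1 for s in samples if abs(s) >= FULL_SCALE - 1) / len(samples)
    if clipped > max_clipped:
        problems.append(f"{clipped:.2%} of samples are clipped")

    # RMS level relative to full scale, in dBFS; pure digital silence gives -inf.
    rms = math.sqrt(sum(s * s for s in samples) / len(samples)) / FULL_SCALE
    dbfs = 20 * math.log10(rms) if rms > 0 else float("-inf")
    if dbfs < min_dbfs:
        problems.append(f"level {dbfs:.1f} dBFS suggests near-silence")
    return problems


def check_wav(path: str) -> List[str]:
    """Read a 16-bit PCM WAV file and run assess_pcm16 on its samples."""
    with wave.open(path, "rb") as wav:
        if wav.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM WAV files")
        rate, channels = wav.getframerate(), wav.getnchannels()
        raw = wav.readframes(wav.getnframes())
    samples = array.array("h")  # signed 16-bit integers
    samples.frombytes(raw)
    if sys.byteorder == "big":
        samples.byteswap()  # WAV PCM data is stored little-endian
    return assess_pcm16(samples, rate, channels)
```

Calling `check_wav("clip.wav")` (a placeholder file name) returns an empty list when a clip passes; anything else is a list of reasons to send it back for re-recording or manual review. Splitting the file reading from `assess_pcm16` keeps the checks testable on in-memory samples.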

Teams that invest in these practices end up with models that perform more evenly across languages and dialects. They also sleep better knowing the data pipeline is legally sound.

For organizations that don’t have the resources to run large-scale collection themselves, established specialists can bridge the gap. Artlangs Translation stands out here. With deep expertise across more than 230 languages, they have spent years handling translation, video localization, short-drama subtitling, game localization, audiobook and short-drama dubbing, and large-scale multilingual data annotation and transcription. Their track record includes a wide range of projects where ethical sourcing, accent diversity, and strict compliance were non-negotiable, making them a solid partner for anyone serious about building inclusive, globally capable speech AI.